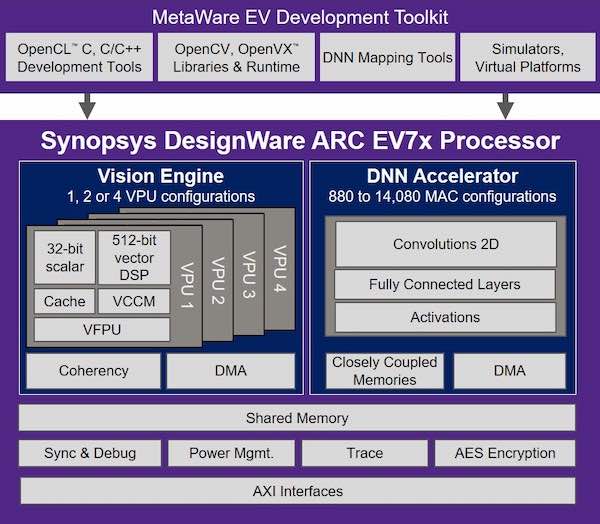

Synopsys' ARC Embedded Vision Processors Deliver Industry-Leading 35 TOPS Performance for AI | Maker Pro

Mipsology Zebra on Xilinx FPGA Beats GPUs, ASICs for ML Inference Efficiency - Embedded Computing Design

Electronics | Free Full-Text | Accelerating Neural Network Inference on FPGA-Based Platforms—A Survey

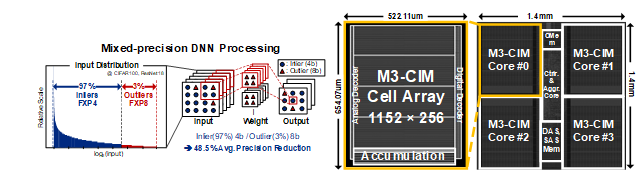

A 161.6 TOPS/W Mixed-mode Computing-in-Memory Processor for Energy-Efficient Mixed-Precision Deep Neural Networks (Prof. Hoi-Jun Yoo's Lab) - KAIST School of Electrical Engineering

![[VLSI 2018] A 4M Synapses integrated Analog ReRAM based 66.5 TOPS/W Neural-Network Processor with Cell Current Controlled Writing and Flexible Network Architecture](https://t1.daumcdn.net/cfile/tistory/993DBA3E5BAF92CE38)

[VLSI 2018] A 4M Synapses integrated Analog ReRAM based 66.5 TOPS/W Neural-Network Processor with Cell Current Controlled Writing and Flexible Network Architecture

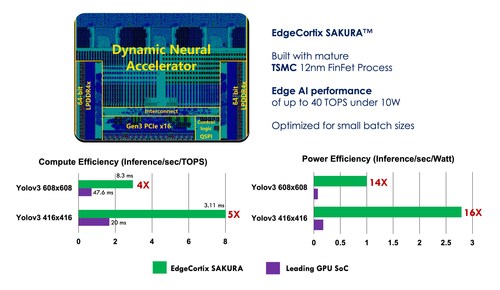

EdgeCortix Announces Sakura AI Co-Processor Delivering Industry Leading Low-Latency and Energy-Efficiency | EdgeCortix

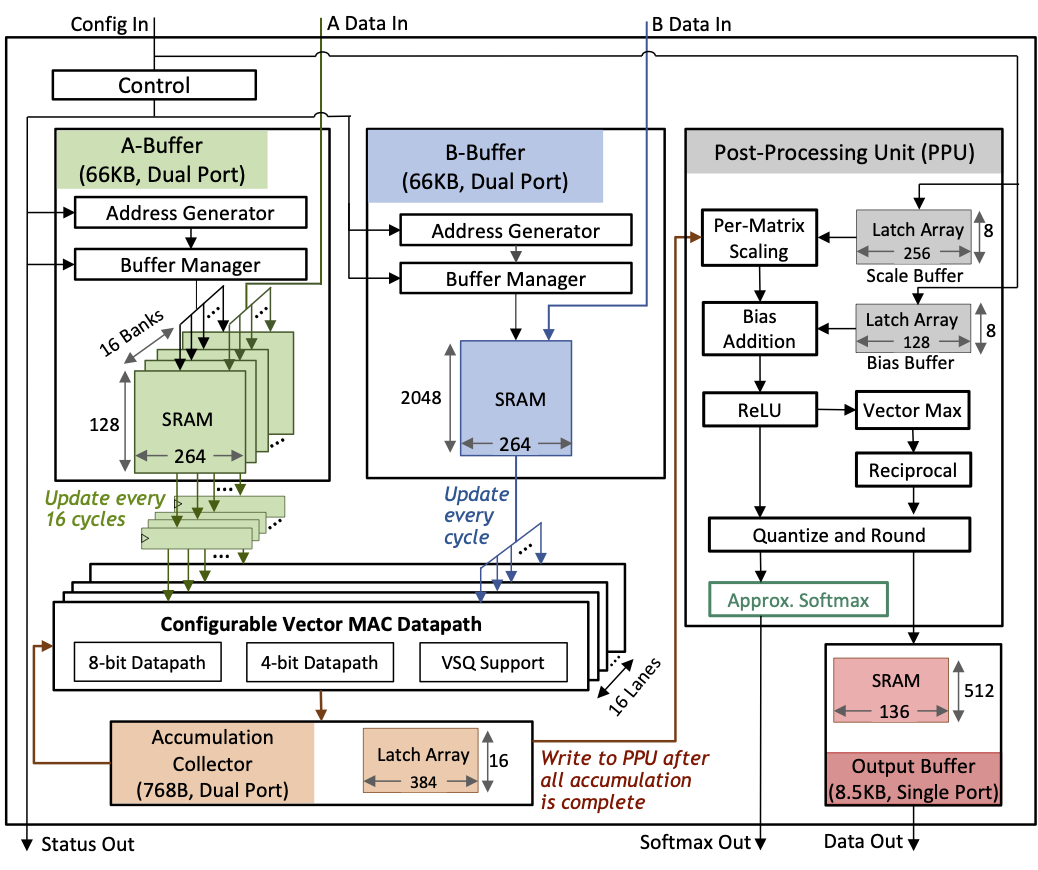

A 17–95.6 TOPS/W Deep Learning Inference Accelerator with Per-Vector Scaled 4-bit Quantization for Transformers in 5nm | Research

![[PDF] A 3.43TOPS/W 48.9pJ/pixel 50.1nJ/classification 512 analog neuron sparse coding neural network with on-chip learning and classification in 40nm CMOS | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/a2e283532b71e9b6af7addb3b3f4f4a1af6e0fb4/2-Figure1-1.png)

[PDF] A 3.43TOPS/W 48.9pJ/pixel 50.1nJ/classification 512 analog neuron sparse coding neural network with on-chip learning and classification in 40nm CMOS | Semantic Scholar

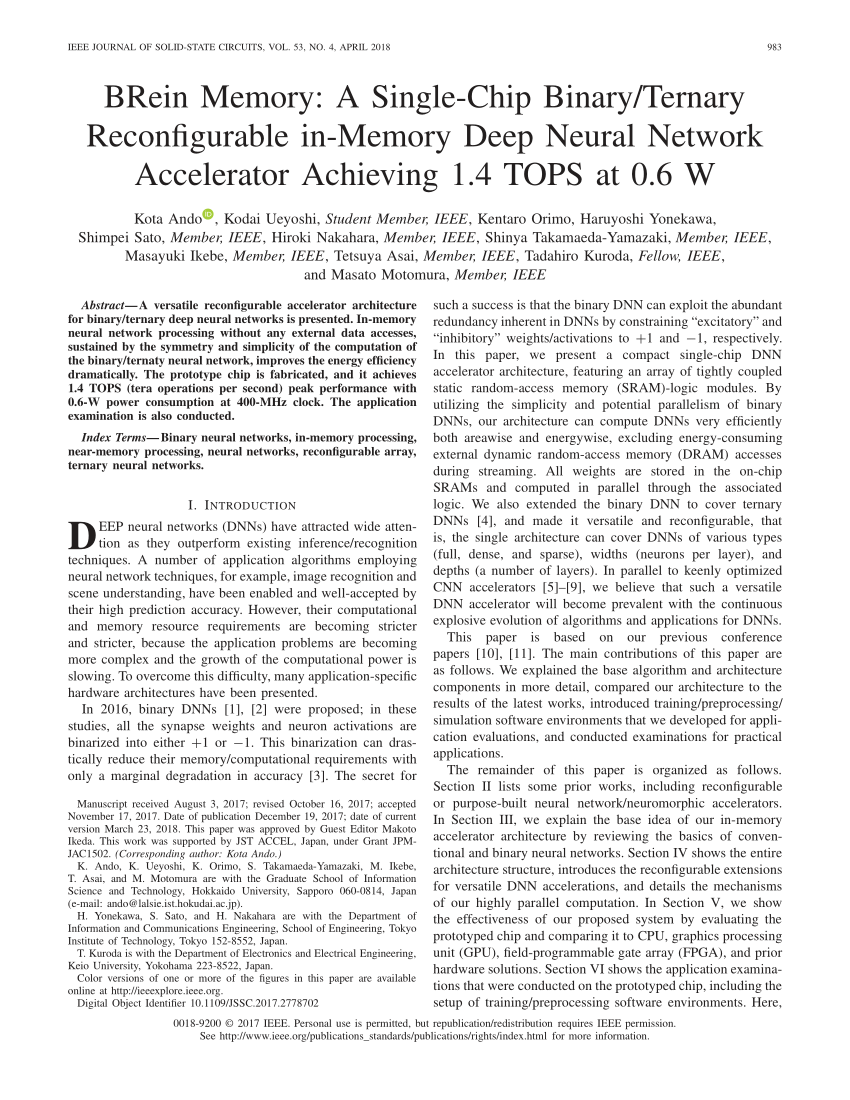

(PDF) BRein Memory: A Single-Chip Binary/Ternary Reconfigurable in-Memory Deep Neural Network Accelerator Achieving 1.4 TOPS at 0.6 W